Smart Article System in Practice: Data Collection (3)

Published: 2018-6-19 18:26 Author: admin Views: 2369

<?php
require_once('./inc.php');

// Use regular expressions to extract each page's title, category and body, then store them. This completes the data collection.

// Number of list pages to collect
$p=1;

// Collect page by page
for($i=1;$i<=$p;$i++)
{
    // List API address (for demonstration and study only)
    $url='http://feed.mix.sina.com.cn/api/roll/get?pageid=153&lid=2509&k=&num=50&page='.$i;

    // Fetch the list; skip this page if the request fails
    $fileContent=file_get_contents($url);
    if($fileContent===false)
    {
        continue;
    }

    // Parse the JSON
    $jsonArray=json_decode($fileContent,true);

    // Get the list data
    $dataList=isset($jsonArray['result']['data']) ? $jsonArray['result']['data'] : array();
    if($dataList)
    {
        foreach($dataList as $key => $row)
        {
            $wapurl=$row['wapurl'];

            // Fetch the HTML source of the article page
            $html=file_get_contents($wapurl);
            if($html===false)
            {
                continue;
            }

            // Extract the title
            $title='';
            if(preg_match('|<h1 class="art_tit_h1">(.*)</h1>|Uis',$html,$match))
            {
                $title=$match[1];
            }

            // Extract the category
            $category='';
            if(preg_match('|<h2 class="hd_tit_l">(.*)</h2>|Uis',$html,$match))
            {
                $category=preg_replace('/(\s+)/',' ',trim(strip_tags($match[1])));
            }

            // Extract the body
            $content='';
            if(preg_match('|<!--标题_e-->(.*)<div id=\'wx_pic\' style=\'margin:0 auto;display:none;\'>|Uis',$html,$match))
            {
                $content=trim($match[1]);
            }

            // Strip unwanted tags from the body
            $content=preg_replace("|<section(.*)</section>|Uis","",$content);
            $content=preg_replace('|<h2 class="art_img_tit">(.*)</h2>|Uis',"",$content);

            // Drop hidden images and point the remaining ones at their data-src address
            preg_match_all('|<img (.*) src="(.*)" data-src="(.*)" (.*)>|Uis',$content,$imgMatch);
            if($imgMatch[0])
            {
                foreach($imgMatch[0] as $imgKey => $img)
                {
                    // strpos() returns 0 for a match at the start, so compare against false explicitly
                    if(strpos($imgMatch[4][$imgKey],'style="display:none"')!==false)
                    {
                        $content=str_replace($img,"",$content);
                    }
                    else
                    {
                        $content=str_replace($img,"<img src='".$imgMatch[3][$imgKey]."' ".$imgMatch[4][$imgKey]."/>",$content);
                    }
                }
            }

            // Store the rows that pass the checks in the database to finish the collection
            $times=time();
            if($title && $content && strlen($title)>3 && strlen($content)>100)
            {
                // Insert into the database
                $sql="INSERT INTO article SET `title`='".addslashesObj($title)."',`content`='".htmlspecialcharsObj(addslashesObj($content))."',`times`='".$times."'";
                $ret=$db->query($sql);
            }
        }
    }
}
?>

Summary: PHP regular expressions parse the page source to extract the relevant content, and the parsed content is inserted into the database.
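The title extraction above relies on a non-greedy, case-insensitive match in which `.` also matches newlines (the PHP `U`, `i` and `s` modifiers). As a minimal sketch of the same idea in Java, assuming a hypothetical helper class `TitleExtractor` that is not part of the original system:

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration only; the article's own collector is the PHP script.
class TitleExtractor {
    // (.*?) is reluctant like PHP's U modifier; DOTALL lets "." cross line breaks like the s modifier.
    private static final Pattern TITLE = Pattern.compile(
            "<h1 class=\"art_tit_h1\">(.*?)</h1>",
            Pattern.CASE_INSENSITIVE | Pattern.DOTALL);

    /** Returns the trimmed title, or an empty Optional when the markup is absent. */
    static Optional<String> extractTitle(String html) {
        Matcher m = TITLE.matcher(html);
        return m.find() ? Optional.of(m.group(1).trim()) : Optional.empty();
    }

    public static void main(String[] args) {
        String html = "<html><h1 class=\"art_tit_h1\">\n Hello News </h1><p>body</p></html>";
        System.out.println(extractTitle(html).orElse("(no title)"));
        System.out.println(extractTitle("<p>no heading</p>").orElse("(no title)"));
    }
}
```

Returning an empty result instead of reading a missing capture group is the important part: a page whose layout has changed is then skipped rather than stored with an empty title.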

samba的使用

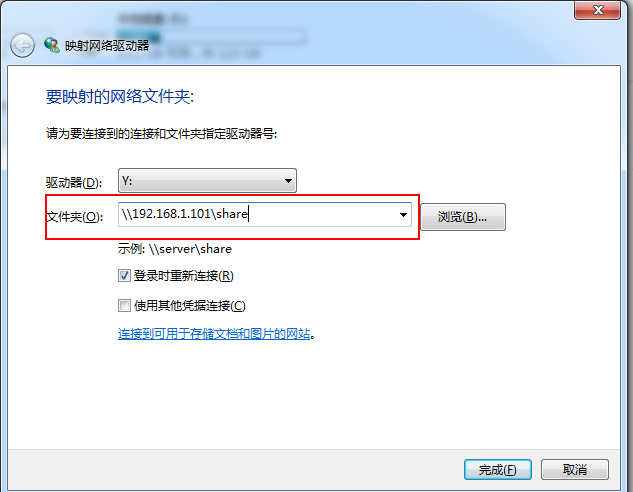

发布于:2018-6-19 16:53 作者:admin 浏览:1864 分类:Linuxsamba的使用 1.samba的安装 #yum install samba samba-common smbclient 2.新建共享用户和路径 #mkdir /usr/local/sambashare #groupadd sambasharegroup #useradd -g sambasharegroup sambashareuser #smbpasswd -a sambashareuser #chown -R sambashareuser:sambasharegroup /usr/local/sambashare 3.配置samba。 #vi /etc/samba/smb.conf #共享IP:192.168.1.101, 共享名:share, 共享路径:/usr/local/sambashare, #共享用户需要输入的用户sambashareuser 和 刚才设置sambashareuser密码. [share] comment = share path = /usr/local/sambashare browseable = yes writable = yes available = yes valid users = sambashareuser4.windows使用计算机->映射网络驱动器->文件夹:输入 \\liunx的IP地址\共享名 [共享地址]。即可使用。当做本地一个磁盘,用本地的编辑器操作linux的文件

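Fixing "Permission denied" when accessing nginx

Published: 2018-6-15 14:43 Author: admin Views: 3432 Category: Error roundup

connect() to 127.0.0.1:9000 failed (13: Permission denied) while connecting to upstream, client: 127.0.0.1, server:

SELinux is blocking nginx from connecting to the upstream. Allow the connection:

#setsebool -P httpd_can_network_connect 1

Alternatively, disable SELinux temporarily (no reboot required):

#setenforce 0

Or disable SELinux permanently, then reboot the machine:

#vi /etc/selinux/config

Change SELINUX=enforcing to SELINUX=disabled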

Smart Article System in Practice: Article CRUD in PHP with an MVC Architecture (2)

Published: 2018-6-15 13:14 Author: admin Views: 2565

1. Core shared file inc.php

<?php
@session_start();
header("Content-Type:text/html;charset=utf-8");
error_reporting(E_ALL & ~E_NOTICE);

// Constants and variables

// Functions

// Output JSON
function showJson($errCode=0,$errMsg='',$data=array())
{
    echo json_encode(array('errCode'=>$errCode,'errMsg'=>$errMsg,'data'=>$data));
}

// URL redirect
function gotoURL($url='',$msg='',$sec=2)
{
    if(!empty($url))
    {
        if($sec>0)
        {
            header("refresh:$sec;url={$url}");
        }
        else
        {
            header("Location:{$url}");
        }
    }
    if(!empty($msg))
    {
        print($msg);
    }
}

// Return the string (or array of strings) with backslashes added before the predefined characters,
// so it can be stored in the database or used in a query
function addslashesObj($data)
{
    // Magic quotes only exist before PHP 5.4; when they are on, the input is already escaped
    if(PHP_VERSION_ID<50400 && get_magic_quotes_gpc())
    {
        return $data;
    }
    if(is_array($data))
    {
        foreach($data as $k=>$v)
        {
            $data[$k]=addslashesObj($v);
        }
    }
    else
    {
        $data=addslashes((string)$data);
    }
    return $data;
}

// Convert the predefined characters to HTML entities
function htmlspecialcharsObj($data)
{
    if(is_array($data))
    {
        foreach($data as $k=>$v)
        {
            $data[$k]=htmlspecialcharsObj($v);
        }
    }
    else
    {
        $data=htmlspecialchars((string)$data,ENT_QUOTES,'UTF-8');
    }
    return $data;
}

// Minimal template rendering
function parseTemplate($fileName)
{
    global $TEMPLATE;
    include $_SERVER['DOCUMENT_ROOT'].$fileName;
}

// Database
$DBConfig=array(
    'host'=>'127.0.0.1', // database host IP
    'user'=>'root', // database user
    'password'=>'', // database password
    'dbname'=>'article', // database name
    'dbcharset'=>'UTF8', // database character set
);

// Connect to the database through mysqli
$db = new mysqli($DBConfig['host'],$DBConfig['user'],$DBConfig['password'],$DBConfig['dbname']);
if($db->connect_error)
{
    exit('Database connection failed');
}
$db->query("SET NAMES {$DBConfig['dbcharset']}");

2. Article CRUD (logic separated from templates). Logic part: admin.php

<?php
require_once('./inc.php');

// Article create, read, update and delete

// Verify the login (HTTP Basic authentication)
$authUser=isset($_SERVER['PHP_AUTH_USER']) ? $_SERVER['PHP_AUTH_USER'] : '';
$authPw=isset($_SERVER['PHP_AUTH_PW']) ? $_SERVER['PHP_AUTH_PW'] : '';
// Both values are compared with ===; a single = would assign the password and accept any input
if(!($authUser==='admin' && $authPw==='123456'))
{
    // Send the headers before any output
    header('HTTP/1.1 401 Unauthorized');
    header('WWW-Authenticate: Basic realm="Auth"');
    echo 'Wrong password';
    exit();
}

$action=addslashesObj($_REQUEST['action']);
switch($action)
{
    // Add-article form
    case 'add':
        parseTemplate('/views/admin/article/add.php');
        break;

    // Handle add-article
    case 'addOK':
        // Read the parameters
        $title=addslashesObj($_REQUEST['title']);
        $content=addslashesObj($_REQUEST['content']);
        $times=time();
        // Insert into the database
        $sql="INSERT INTO article SET `title`='".$title."',`content`='".$content."',`times`='".$times."'";
        $ret=$db->query($sql);
        if($ret)
        {
            gotoURL('admin.php',"Published successfully");
        }
        else
        {
            gotoURL('admin.php',"Publishing failed");
        }
        break;

    // Edit-article form
    case 'edit':
        // Read the parameters
        $id=intval($_REQUEST['id']);
        $page=intval($_REQUEST['page']);
        // Load the article
        $sql="SELECT * FROM article WHERE id='".$id."'";
        $result=$db->query($sql);
        if($result && $info=$result->fetch_array())
        {
            $TEMPLATE['info']=$info;
            $TEMPLATE['page']=$page;
            parseTemplate('/views/admin/article/edit.php');
        }
        else
        {
            gotoURL('admin.php',"Record not found");
        }
        break;

    // Handle edit-article
    case 'editOK':
        // Read the parameters
        $id=intval($_REQUEST['id']);
        $page=intval($_REQUEST['page']);
        $title=addslashesObj($_REQUEST['title']);
        $content=addslashesObj($_REQUEST['content']);
        $update_time=time();
        // Update the database. The article table created in part (1) has no update_time column,
        // so add one first or this statement fails.
        $sql="UPDATE article SET `title`='".$title."',`content`='".$content."',`update_time`='".$update_time."' WHERE id='".$id."'";
        $ret=$db->query($sql);
        if($ret)
        {
            gotoURL('admin.php?action=list&page='.$page,"Updated successfully");
        }
        else
        {
            gotoURL('admin.php?action=list&page='.$page,"Update failed");
        }
        break;

    // Handle delete-article
    case 'deleteOK':
        // Read the parameters
        $id=intval($_REQUEST['id']);
        $page=intval($_REQUEST['page']);
        // Delete from the database
        $sql="DELETE FROM article WHERE id='".$id."'";
        $ret=$db->query($sql);
        if($ret)
        {
            gotoURL('admin.php?action=list&page='.$page,"Deleted successfully");
        }
        else
        {
            gotoURL('admin.php?action=list&page='.$page,"Delete failed");
        }
        break;

    // View an article
    case 'show':
        // Read the parameters
        $id=intval($_REQUEST['id']);
        // Query the database
        $sql="SELECT * FROM article WHERE id='".$id."'";
        $result=$db->query($sql);
        if($result && $info=$result->fetch_array())
        {
            $TEMPLATE['info']=$info;
            parseTemplate('/views/admin/article/show.php');
        }
        else
        {
            gotoURL('admin.php',"Record not found");
        }
        break;

    // Article list
    default:
        $sql="SELECT count(*) as num FROM article";
        $result=$db->query($sql);
        $info=$result->fetch_array();
        $allnum=intval($info['num']);
        $size=10;
        $page=intval($_REQUEST['page']);
        $maxpage=ceil($allnum/$size);
        // Clamp the requested page into the range 1..$maxpage
        if($page>$maxpage)
        {
            $page=$maxpage;
        }
        if($page<=0)
        {
            $page=1;
        }
        $offset=($page-1)*$size;
        $datas=array();
        $sql="SELECT * FROM article ORDER BY id DESC LIMIT {$offset},{$size}";
        $result=$db->query($sql);
        while($result && $info=$result->fetch_array())
        {
            $datas[]=$info;
        }
        $TEMPLATE['list']=$datas;
        $TEMPLATE['page']=$page;
        parseTemplate('/views/admin/article/list.php');
        break;
}
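The list branch of admin.php clamps the requested page and derives the `LIMIT` offset from it. A minimal sketch of that arithmetic in Java, assuming a hypothetical `Pager` class (the name and method signatures are illustrative, not part of the article's code):

```java
// Hypothetical helper for illustration; mirrors the page clamping done in admin.php.
class Pager {
    /** Clamps a requested page into [1, maxPage]; an empty table still yields page 1. */
    static int clampPage(int page, int totalRows, int pageSize) {
        int maxPage = (totalRows + pageSize - 1) / pageSize; // integer ceiling of totalRows / pageSize
        if (page > maxPage) {
            page = maxPage;
        }
        if (page <= 0) {
            page = 1; // also covers maxPage == 0
        }
        return page;
    }

    /** Row offset for "LIMIT offset, pageSize". */
    static int offset(int page, int pageSize) {
        return (page - 1) * pageSize;
    }

    public static void main(String[] args) {
        int size = 10;
        int page = clampPage(99, 25, size); // 25 rows make 3 pages, so 99 is clamped to 3
        System.out.println("page=" + page + " offset=" + offset(page, size));
    }
}
```

Checking the upper bound before the lower bound matters: with an empty table the maximum page is 0, and the second check lifts the page back to 1 so the offset never goes negative.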

3. Template (list page only; implement the other templates yourself). File name: ./views/admin/article/list.php

<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="utf-8">
<meta http-equiv="X-UA-Compatible" content="IE=edge">
<meta name="viewport" content="width=device-width, initial-scale=1">
<meta name="description" content="">
<meta name="author" content="">
<title>Smart Article Management Platform</title>
<link href="/css/bootstrap.3.0.0.united.css" rel="stylesheet">
<script src="/js/jquery-1.11.2.min.js"></script>
<script src="/js/bootstrap.min.js"></script>
</head>
<body>
<p>
<a class="btn btn-sm btn-primary" href="?action=add" >Add article</a>
</p>
<table class="table table-striped table-hover ">
<thead>
<tr>
<th>ID</th>
<th>Title</th>
<th>Actions</th>
</tr>
</thead>
<tbody>
<?php
foreach($TEMPLATE['list'] as $key =>$row)
{
?>
<tr>
<td><?=$row['id']?></td>
<td><?=htmlspecialchars($row['title'],ENT_QUOTES,'UTF-8')?></td>
<td>
<a href="?action=edit&id=<?=$row['id']?>">Edit</a>
<a href="?action=show&id=<?=$row['id']?>">View</a>
<a class="mal10" href="javascript:confirmDelete('<?=$row['id']?>');">Delete</a>
</td>
</tr>
<?php
}
?>
</tbody>
</table>
<nav align="right">
<ul class="pagination">
<li><a href="?action=list&page=1">First</a></li>
<li><a href="?action=list&page=<?=$TEMPLATE['page']-1?>">«</a></li>
<li class="active"><a href="javascript:void(0)"><?=$TEMPLATE['page']?></a></li>
<li><a href="?action=list&page=<?=$TEMPLATE['page']+1?>">»</a></li>
</ul>
</nav>
<script>
function confirmDelete(id)
{
    if(confirm("Are you sure you want to delete this article?")==true)
    {
        location.href='?action=deleteOK&id='+id;
    }
}
</script>
</body>
</html>

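4. Summary

The simplest possible MVC architecture.

Following a minimal-system design, this implements only create, read, update and delete for the admin back end, plus one list template page; the other template pages are left to the reader. Little was designed for security or architecture: form input validation, security checks, database read/write splitting and file upload are not implemented, and access control relies solely on HTTP 401 authentication. What it does achieve is the simplest separation of logic from templates.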

Smart Article System in Practice: Creating the Database (1)

Published: 2018-6-15 10:38 Author: admin Views: 2622

1. Install the MariaDB database

#yum install mariadb mariadb-server mariadb-devel #install
#systemctl start mariadb #start the service

2. Create the database article

[root@localhost ~]# mysql
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MariaDB connection id is 379
Server version: 5.5.56-MariaDB MariaDB Server

Copyright (c) 2000, 2017, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE article DEFAULT CHARACTER SET utf8 COLLATE utf8_general_ci;
Query OK, 1 row affected (0.02 sec)

MariaDB [(none)]> use article;
Database changed

MariaDB [article]> CREATE TABLE `article` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'Article ID',
  `title` varchar(255) NOT NULL DEFAULT '' COMMENT 'Article title',
  `content` text NOT NULL COMMENT 'Article content',
  `times` int(11) NOT NULL DEFAULT '0' COMMENT 'Publication time',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;
Query OK, 0 rows affected (0.05 sec)

MariaDB [article]> select * from article;
Empty set (0.00 sec)

MariaDB [article]>

Note: this follows the simplest possible design, so the table omits fields such as category, keywords, lead paragraph and review status.

Installing Hive

Published: 2018-6-11 14:41 Author: admin Views: 2488 Category: System architecture

Requirements

Java jdk1.8.0_171
Hadoop 2.9.1
Hive 2.3.3
MySQL 5.5.56
MySQL driver: mysql-connector-java.jar

#wget http://mirror.bit.edu.cn/apache/hive/hive-2.3.3/apache-hive-2.3.3-bin.tar.gz
#tar -xzvf apache-hive-2.3.3-bin.tar.gz
#mv apache-hive-2.3.3-bin /usr/local/soft/hive
#cp mysql-connector-java.jar /usr/local/soft/hive/lib/

#vi /etc/profile

export HIVE_HOME=/usr/local/soft/hive
export PATH=${HIVE_HOME}/bin:$PATH
export CLASSPATH=.:${HIVE_HOME}/lib:$CLASSPATH

MySQL setup (metastore database)

mysql> CREATE DATABASE hive DEFAULT CHARACTER SET utf8 COLLATE utf8_general_ci;
mysql> GRANT ALL PRIVILEGES ON hive.* TO 'hive'@'%' IDENTIFIED BY 'hive' WITH GRANT OPTION;
mysql> FLUSH PRIVILEGES;

Hive configuration file

#cd /usr/local/soft/hive/conf
#cp hive-default.xml.template hive-site.xml
#vi hive-site.xml

<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
  </property>
</configuration>

Initialize the Hive metastore schema

#/usr/local/soft/hive/bin/schematool -dbType mysql -initSchema

Start Hive

#/usr/local/soft/hive/bin/hive

hive> show databases;
hive> create database wordcount;
hive> use wordcount;
hive> create table words(name string,num int);
hive> insert into words(name,num) values('hello',2);
hive> insert into words(name,num) values('world',1);
hive> insert into words(name,num) values('hello',1);
hive> select * from words;
hive> select name,sum(num) from words group by name;

Inspect Hive's metadata in the MySQL metastore database hive.

mysql>select * from hive.DBS;

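Hadoop in Practice: MapReduce with PHP

Published: 2018-6-8 8:51 Author: admin Views: 2426 Category: System architecture

1. Write the Mapper

#vi WordMap.php

#!/usr/bin/php
<?php
// Read lines from standard input and emit "word<TAB>1" for every word
while (($line = fgets(STDIN)) !== false)
{
    // PREG_SPLIT_NO_EMPTY drops the empty strings caused by leading or trailing whitespace
    $words = preg_split('/\s+/', $line, -1, PREG_SPLIT_NO_EMPTY);
    foreach ($words as $word)
    {
        echo $word."\t"."1".PHP_EOL;
    }
}
?>

2. Write the Reducer

#vi WordReduce.php

#!/usr/bin/php
<?php
// Sum the counts per word from "word<TAB>count" lines on standard input
$result=array();
while (($line = fgets(STDIN)) !== false)
{
    $parts = explode("\t", trim($line), 2);
    if (count($parts) < 2)
    {
        continue; // skip blank or malformed lines
    }
    list($k, $v) = $parts;
    if (!isset($result[$k]))
    {
        $result[$k] = 0;
    }
    $result[$k] += (int)$v;
}
ksort($result);
foreach($result as $word => $count)
{
    echo $word."\t".$count.PHP_EOL;
}
?>

3. Run the word count job

#chmod 0777 WordMap.php
#chmod 0777 WordReduce.php
#bin/hadoop jar share/hadoop/tools/lib/hadoop-streaming-2.9.1.jar -files WordMap.php,WordReduce.php -mapper WordMap.php -reducer WordReduce.php -input HdfsInput/* -output HdfsOutput

4. View the results

#hadoop fs -ls HdfsOutput
#hadoop fs -cat HdfsOutput/*
#hadoop fs -get HdfsOutput LocalOutput
#cat LocalOutput/*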
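Hadoop Streaming hands the reducer its input sorted by key, so a reducer does not have to hold every word in memory as the PHP array does; it can flush a total whenever the key changes. The following is a minimal sketch of that variant in Java, run on an in-memory list instead of standard input; the class `StreamingReduceSketch` is hypothetical and only illustrates the idea:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration of a streaming-style reducer over key-sorted "word<TAB>count" lines.
class StreamingReduceSketch {
    /** Sums the counts of consecutive lines that share a key; the input must be sorted by key. */
    static List<String> reduceSorted(List<String> sortedLines) {
        List<String> out = new ArrayList<>();
        String currentKey = null;
        long sum = 0;
        for (String line : sortedLines) {
            String[] parts = line.split("\t", 2);
            if (parts.length < 2) {
                continue; // skip blank or malformed lines
            }
            String key = parts[0];
            long value = Long.parseLong(parts[1].trim());
            if (currentKey != null && !key.equals(currentKey)) {
                out.add(currentKey + "\t" + sum); // key changed: emit the finished total
                sum = 0;
            }
            currentKey = key;
            sum += value;
        }
        if (currentKey != null) {
            out.add(currentKey + "\t" + sum); // emit the last key
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> mapped = Arrays.asList("Hadoop\t1", "Hello\t1", "Hello\t1", "World\t1");
        for (String line : reduceSorted(mapped)) {
            System.out.println(line);
        }
    }
}
```

Only one key and one running total are kept at a time, which is the usual reason to prefer this shape once the vocabulary no longer fits comfortably in memory.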

Hadoop in Practice: MapReduce

Published: 2018-6-7 12:41 Author: admin Views: 2147 Category: System architecture

Hadoop in Practice: Environment Setup

http://www.wangfeilong.cn/server/114.html

Hadoop in Practice: MapReduce

1. Write the Mapper

#vi WordMap.java

import java.io.IOException;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Mapper implementation
public class WordMap extends Mapper<LongWritable, Text, Text, LongWritable>{
    @Override
    protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, LongWritable>.Context context)
            throws IOException, InterruptedException {
        // Take the line that was read and split it into words on whitespace
        String line = value.toString();
        String[] words = line.trim().split("\\s+");
        for (String word : words) {
            if (word.isEmpty()) {
                continue; // a blank line yields a single empty token
            }
            // Emit the word as the key and a count of 1 as the value; these pairs are passed on to the reducer
            context.write(new Text(word), new LongWritable(1));
        }
    }
}

2. Write the Reducer

#vi WordReduce.java

import java.io.IOException;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// Reducer implementation
public class WordReduce extends Reducer<Text, LongWritable, Text, LongWritable> {
    /**
     * First Text: the incoming word, as emitted by the Mapper
     * Second, LongWritable: how many times the word occurred; MapReduce supplies these values
     * Third Text: the word written to the output
     * Fourth LongWritable: the count written to the output
     */
    @Override
    protected void reduce(Text key, Iterable<LongWritable> values,
            Reducer<Text, LongWritable, Text, LongWritable>.Context context) throws IOException, InterruptedException {
        // Accumulate the total
        long count = 0;
        for (LongWritable num : values) {
            count += num.get();
        }
        context.write(key, new LongWritable(count));
    }
}

3. Write the main method that runs this MapReduce job

#vi WordMapReduce.java

import java.io.IOException;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

// MapReduce driver
public class WordMapReduce{
    public static void main(String[] args) throws IOException {
        Configuration conf = new Configuration();
        // These two settings are not needed when the jar is packaged and run on the Linux host itself
        /*
        // Run on YARN
        conf.set("mapreduce.framework.name", "yarn");
        // Host name of the ResourceManager
        conf.set("yarn.resourcemanager.hostname", "localhost");
        */
        Job job = Job.getInstance(conf);
        // Lets Hadoop locate the jar from the class
        job.setJarByClass(WordMapReduce.class);
        // Mapper class
        job.setMapperClass(WordMap.class);
        // Reducer class
        job.setReducerClass(WordReduce.class);
        // Mapper output types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);
        // Final output types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);
        // Input location, taken from the first command-line argument for flexibility
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        // Output location, taken from the second command-line argument
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        // Submit the job to YARN
        //job.submit();
        try {
            // Passing true prints the progress and result of the run
            boolean succeeded = job.waitForCompletion(true);
            System.exit(succeeded ? 0 : 1);
        } catch (ClassNotFoundException | InterruptedException e) {
            e.printStackTrace();
            System.exit(1);
        }
    }
}

4. Compile WordMapReduce

Set the environment variable first

#export CLASSPATH=.:/usr/local/soft/jdk1.8.0_171/lib:/usr/local/soft/jdk1.8.0_171/jre/lib:$(/usr/local/soft/hadoop/bin/hadoop classpath):$CLASSPATH

Compile

#javac WordMap.java

#javac WordReduce.java

#javac WordMapReduce.java

5. Package the WordMap, WordReduce and WordMapReduce classes into a jar

#jar cvf WordMapReduce.jar Word*.class

6. Run WordMapReduce

#hadoop jar WordMapReduce.jar WordMapReduce HdfsInput HdfsOutput

7. View the results

#hadoop fs -ls HdfsOutput

#hadoop fs -cat HdfsOutput/*

#hadoop fs -get HdfsOutput LocalOutput

#cat LocalOutput/*
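To see what the job computes without a cluster, the three phases can be imitated in plain Java. This is only a sketch under stated assumptions: the class `LocalWordCount` and its methods are hypothetical stand-ins for the framework, with map emitting (word, 1) pairs, shuffle grouping the values by key, and reduce summing each group.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory imitation of map, shuffle and reduce; no Hadoop classes are used.
class LocalWordCount {
    /** Map phase: emit one (word, 1) pair per whitespace-separated token. */
    static List<Map.Entry<String, Long>> map(String line) {
        List<Map.Entry<String, Long>> pairs = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                pairs.add(new AbstractMap.SimpleEntry<>(word, 1L));
            }
        }
        return pairs;
    }

    /** Shuffle phase: collect every emitted value under its key, with keys kept sorted. */
    static Map<String, List<Long>> shuffle(List<Map.Entry<String, Long>> pairs) {
        Map<String, List<Long>> grouped = new TreeMap<>();
        for (Map.Entry<String, Long> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    /** Reduce phase: sum the values grouped under one key. */
    static long reduce(List<Long> values) {
        long count = 0;
        for (long v : values) {
            count += v;
        }
        return count;
    }

    /** Runs the three phases over all input lines and returns the total per word. */
    static Map<String, Long> run(List<String> lines) {
        List<Map.Entry<String, Long>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Long> totals = new TreeMap<>();
        for (Map.Entry<String, List<Long>> group : shuffle(pairs).entrySet()) {
            totals.put(group.getKey(), reduce(group.getValue()));
        }
        return totals;
    }

    public static void main(String[] args) {
        // prints {Hadoop=1, Hello=3, JAVA=1, World=1}
        System.out.println(run(Arrays.asList("Hello World", "Hello Hadoop", "Hello JAVA")));
    }
}
```

In the real job the shuffle step is performed by the framework between WordMap and WordReduce, which is why WordReduce receives an `Iterable` of counts for each word.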

Hadoop in Practice: Environment Setup

Published: 2018-6-7 10:55 Author: admin Views: 2417 Category: System architecture

Hadoop in Practice (1): Environment Setup

1. Software required

centos7

SSH

Java 1.8.0_171

Hadoop 2.9.1

2. Install SSH

#yum install openssh-server openssh-clients

3. Create the software installation directory

#mkdir -p /usr/local/soft/

#cd /usr/local/soft/

3. Install Java 1.8.0_171

3.1. Download

#wget -O jdk-8u171-linux-x64.tar.gz 'http://download.oracle.com/otn-pub/java/jdk/8u171-b11/512cd62ec5174c3487ac17c61aaa89e8/jdk-8u171-linux-x64.tar.gz?AuthParam=1528334282_eed030b012c430a6a5d6cebcfd2ff96f'

3.2. Extract

#tar -zxvf jdk-8u171-linux-x64.tar.gz

3.3. Set the environment variables

#vi /etc/profile

export JAVA_HOME=/usr/local/soft/jdk1.8.0_171

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

3.4. Apply the environment variables

#source /etc/profile

4. Install Hadoop 2.9.1

4.1. Download

#wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-2.9.1/hadoop-2.9.1.tar.gz

4.2 Extract

#tar -zxvf hadoop-2.9.1.tar.gz

4.3 Rename the directory to hadoop

#mv hadoop-2.9.1 hadoop

4.4. Set the environment variables

#vi /etc/profile

export HADOOP_HOME=/usr/local/soft/hadoop

export PATH=${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:$PATH

export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH

4.5. Apply the environment variables

#source /etc/profile

5. Hadoop standalone mode

5.1 Configuring standalone mode

#cd /usr/local/soft/hadoop/

#vi etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/local/soft/jdk1.8.0_171

5.2 Standalone mode in practice

#mkdir LocalInput

#cp etc/hadoop/*.xml LocalInput

#bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.1.jar grep LocalInput LocalOutput 'dfs[a-z.]+'

#cat LocalOutput/*

6. Passwordless SSH login

# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# chmod 0600 ~/.ssh/authorized_keys

# ssh localhost

7. Hadoop pseudo-distributed mode

7.1 Configuring pseudo-distributed mode

7.1.1 #vi etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

7.1.2 #vi etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

7.1.3 #vi etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

7.1.4 #vi etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
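All four site files above share one shape: a `<configuration>` root holding `<property>` elements with a `<name>` and a `<value>`. As a sanity check before starting the daemons, such a file can be read back with the JDK's DOM parser. The sketch below assumes a hypothetical class `SiteXmlReader`; it is not Hadoop's own `Configuration` loader and ignores features such as `<final>` or variable substitution.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper for illustration; reads name/value pairs from a Hadoop-style site file.
class SiteXmlReader {
    /** Parses the XML text and returns the properties in document order. */
    static Map<String, String> readProperties(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> props = new LinkedHashMap<>();
            NodeList nodes = doc.getElementsByTagName("property");
            for (int i = 0; i < nodes.getLength(); i++) {
                Element property = (Element) nodes.item(i);
                String name = property.getElementsByTagName("name").item(0).getTextContent().trim();
                String value = property.getElementsByTagName("value").item(0).getTextContent().trim();
                props.put(name, value);
            }
            return props;
        } catch (Exception e) {
            // Malformed XML or a property without name/value ends up here
            throw new IllegalArgumentException("Invalid configuration XML", e);
        }
    }

    public static void main(String[] args) {
        String xml = "<configuration><property><name>fs.defaultFS</name>"
                + "<value>hdfs://localhost:9000</value></property></configuration>";
        System.out.println(readProperties(xml));
    }
}
```

A typo such as an unclosed `<property>` tag then surfaces as an exception here instead of as a daemon that fails to start.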

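7.2 Starting and inspecting pseudo-distributed mode

7.2.1 Format the file system

# bin/hdfs namenode -format

7.2.2 Start the NameNode, DataNode, ResourceManager and NodeManager

#sbin/start-all.sh

7.2.3 Open the monitoring pages

http://localhost:50070/ (NameNode)

http://localhost:8088/ (ResourceManager)

7.3 Uploading and downloading files in pseudo-distributed mode

7.3.1 Create the directories needed to run MapReduce jobs

#bin/hdfs dfs -mkdir /user

#bin/hdfs dfs -mkdir /user/root

7.3.2 Upload local files to HDFS and download them from HDFS

Create the local files

#vi LocalInput/F1.txt

Hello World

Hello Hadoop

#vi LocalInput/F2.txt

Hello JAVA

JAVA 是 一门 面向对象 编程 语言

Upload the local files to HDFS (the target directory must exist when several files are uploaded)

#bin/hdfs dfs -mkdir HdfsInput

#bin/hdfs dfs -put LocalInput/*.txt HdfsInput

List the files in HDFS

#bin/hdfs dfs -ls HdfsInput

Download the HDFS files to the local directory LocalOutput

#bin/hdfs dfs -get HdfsInput LocalOutput

List the downloaded files

#ls -l LocalOutput

7.4 Stopping pseudo-distributed mode

#sbin/stop-all.sh

Hadoop in Practice (2): MapReduce

http://www.wangfeilong.cn/server/115.html

Hadoop in Practice (3): MapReduce with PHP

http://www.wangfeilong.cn/server/116.html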